For the bachelor thesis at the end of my third year studying Design and Product Realization at KTH, I teamed up with Stanislav Minko to build an autonomous vehicle capable of lane-keeping. The idea was inspired by the Tesla Model S, which I had the chance to drive on the way up to Åre for a snowboard trip. This was our first project of this complexity, as most previous coursework involved only building non-functional prototypes. We learnt many new skills, including prototyping with electronics, implementing control loops, LaTeX, basic Git, and lots of troubleshooting. For a quick overview, you can watch the video above (skip to 4:12 to see it driving) and read on, or you can read the full report!

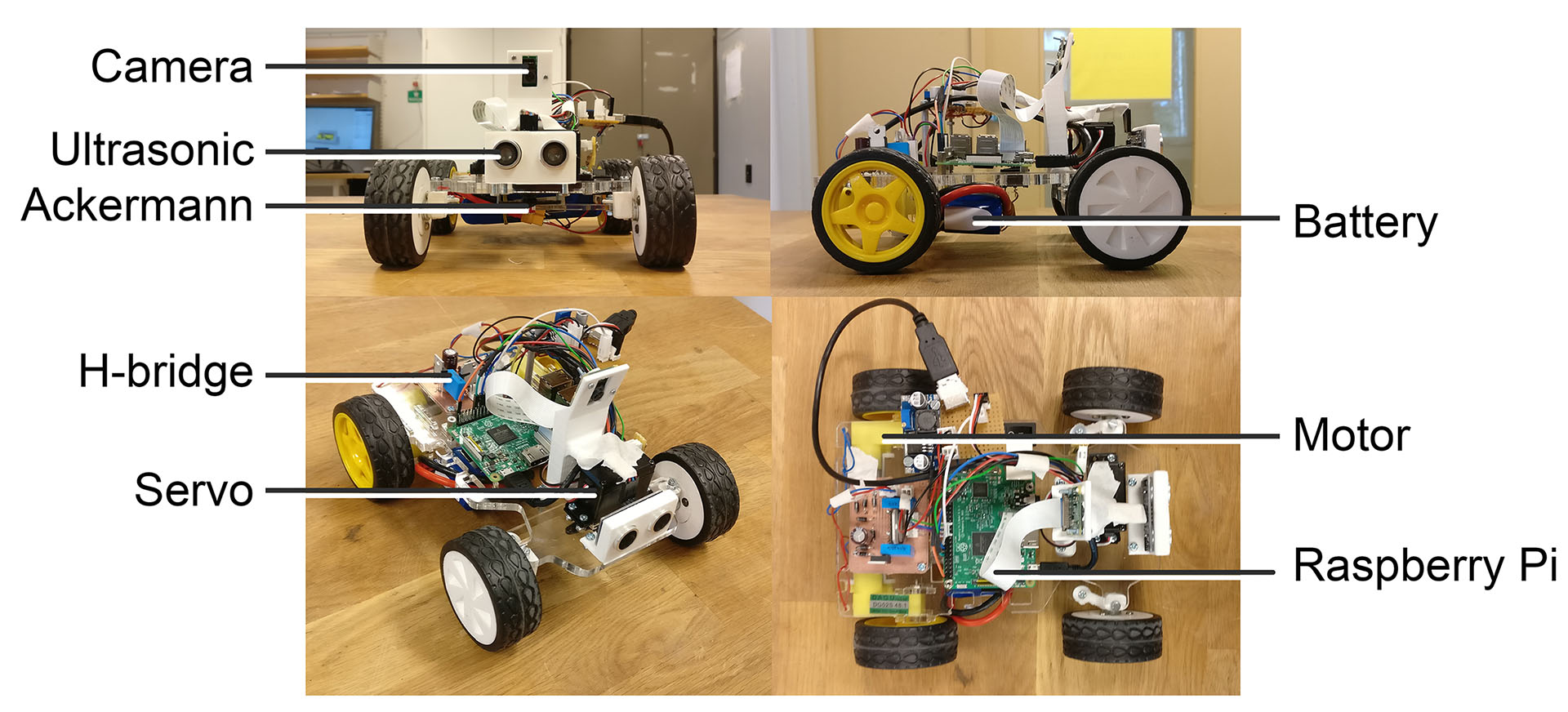

The finished demonstrator.

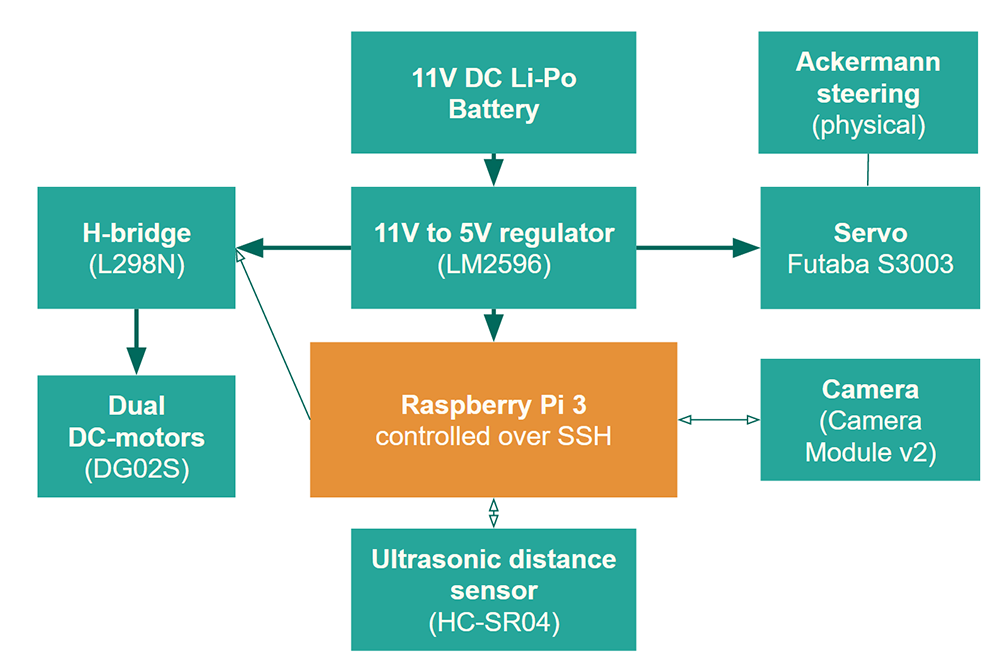

After formulating a clear goal for our project, we made a plan for how to build the demonstrator. Since real-time image processing requires a fair amount of processing power, we decided to build around the Raspberry Pi 3. A Li-Po battery connected to a switching regulator powers the microcontroller, as well as other hardware. An H-bridge is used to control the motors. We never used it, but the setup is capable of driving the two back wheels independently, as well as reversing their rotation. A positional servo is used to steer the two front wheels. The vehicle acts upon input from the ultrasonic distance sensor (for emergency braking) and, of course, the camera, which detects the lane markings.
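The emergency-braking check comes down to a time-of-flight calculation. Here is a minimal sketch, assuming the sensor reports the round-trip echo time in seconds. The function names and the 0.5 m stopping threshold are hypothetical and not taken from the thesis.

```python
SPEED_OF_SOUND_M_S = 343.0  # in air at roughly 20 °C
BRAKE_DISTANCE_M = 0.5      # hypothetical threshold, not from the thesis


def echo_to_distance_m(echo_time_s: float) -> float:
    """Convert a round-trip echo time to a one-way distance in metres."""
    # The pulse travels to the obstacle and back, so halve the path length.
    return echo_time_s * SPEED_OF_SOUND_M_S / 2


def should_brake(echo_time_s: float, threshold_m: float = BRAKE_DISTANCE_M) -> bool:
    """Return True when the obstacle is closer than the threshold."""
    return echo_to_distance_m(echo_time_s) < threshold_m
```

In a real loop, the result of `should_brake` would decide whether the motors are stopped before the next camera frame is processed.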

Once the hardware was assembled, the software had to be implemented. We decided to use Python and the OpenCV package for computer vision. In short, an image is captured, the edges in it are detected (Canny), and the edges are linked into lines (Hough). These lines are sorted into potential left and right lane markings if they fulfil certain criteria regarding slope and position. If at any point one of the lane markings is not found, its last known location is used. This means that our system is capable of handling temporarily obscured or dashed lane markings.
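The sorting and fallback steps can be sketched in plain Python. This is an illustration under assumptions, not the code from the thesis: it takes line segments as `(x1, y1, x2, y2)` pixel tuples, which is the shape of the output of OpenCV's probabilistic Hough transform, and the slope threshold, the "longest segment wins" rule, and all names are hypothetical.

```python
import math

MIN_ABS_SLOPE = 0.3  # hypothetical threshold: reject near-horizontal segments


def classify_segments(segments, image_width):
    """Split segments into left/right candidates by slope sign and position."""
    left, right = [], []
    mid_x = image_width / 2
    for x1, y1, x2, y2 in segments:
        if x1 == x2:
            continue  # vertical segment: slope undefined, skipped for simplicity
        slope = (y2 - y1) / (x2 - x1)
        if abs(slope) < MIN_ABS_SLOPE:
            continue
        center_x = (x1 + x2) / 2
        # Image y grows downward, so a left marking leaning toward the
        # centre has a negative slope and a right marking a positive one.
        if slope < 0 and center_x < mid_x:
            left.append((x1, y1, x2, y2))
        elif slope > 0 and center_x > mid_x:
            right.append((x1, y1, x2, y2))
    return left, right


def longest(segments):
    """Pick the longest segment as the representative lane line."""
    return max(segments, key=lambda s: math.hypot(s[2] - s[0], s[3] - s[1]))


class LaneTracker:
    """Keeps the last known left and right lane lines between frames."""

    def __init__(self):
        self.left = None
        self.right = None

    def update(self, segments, image_width):
        left, right = classify_segments(segments, image_width)
        if left:
            self.left = longest(left)
        if right:
            self.right = longest(right)
        # A side with no candidates this frame keeps its previous value.
        return self.left, self.right
```

Calling `update` once per frame means a dashed or briefly hidden marking simply reuses the line from the most recent frame in which it was seen.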

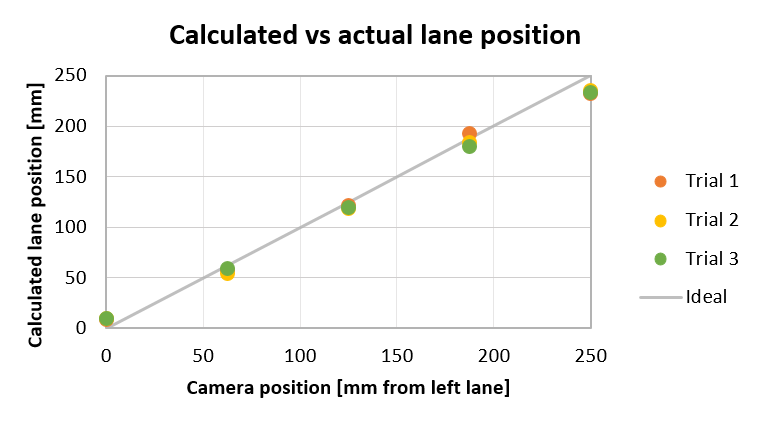

Each lane line is extended to the bottom of the image, and the midpoint between the two intersections is compared with the middle of the camera's field of view. The difference is fed as a positional error into a PID controller, whose output is used to set the servo position. This process is repeated at roughly 15 frames per second. As seen below, the method we developed is quite accurate for determining the vehicle's lane position.
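The error calculation and the controller can be sketched as follows. This is a textbook PID under assumptions, not the implementation from the thesis: the sign convention, the gains, the servo limits, and all function names are hypothetical, and lane lines are assumed to be `(x1, y1, x2, y2)` pixel tuples.

```python
def x_at_row(segment, row_y):
    """Extrapolate a line segment to the image row row_y and return its x."""
    x1, y1, x2, y2 = segment
    if y1 == y2:
        raise ValueError("horizontal segment never crosses the row")
    return x1 + (row_y - y1) * (x2 - x1) / (y2 - y1)


def lane_position_error(left, right, image_width, bottom_y):
    """Lane midpoint at the bottom row minus the image centre, in pixels.

    Assumed convention: a positive error means the lane centre lies to
    the right of the camera centre.
    """
    lane_mid = (x_at_row(left, bottom_y) + x_at_row(right, bottom_y)) / 2
    return lane_mid - image_width / 2


class PID:
    """Discrete PID controller with a fixed-step integral and derivative."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0  # no history on the first sample
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def servo_angle(pid_output, center=90.0, lo=60.0, hi=120.0):
    """Map the PID output to a servo angle, clamped to hypothetical limits."""
    return max(lo, min(hi, center + pid_output))
```

At roughly 15 frames per second, `dt` would be about 1/15 s per call. Clamping the servo angle keeps a large error from commanding a position outside the steering range.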

Accuracy of lane position detection.

Under decent conditions, our demonstrator is able to keep within the lane markings, even through curves (see 4:12 in the video at the top of this page). Dips in frame rate, reflective glare from the floor (which is arguably not a problem when driving on asphalt or concrete in the real world), and a narrow field of view limit the performance of the vehicle's lane-keeping. The project can be directly expanded upon by using the ultrasonic distance sensor for distance keeping, switching to a wide-angle camera, and working out what is causing the frame rate dips. Many advanced systems also use LIDAR to build a 3D representation of obstacles near the vehicle. More complex image recognition techniques could be used to track other cars.

Read the full bachelor thesis (pdf)